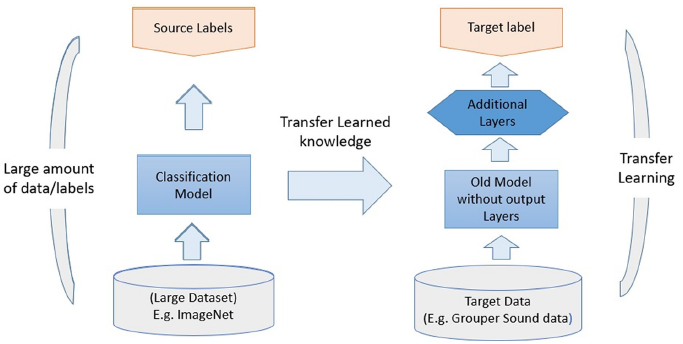

Transfer Learning is a Machine Learning technique that reuses knowledge gained on one task or domain to improve performance on a new, related task. In this blog post, we will discuss the concept of Transfer Learning, its benefits, its main types, its applications, and the challenges that remain.

What is Transfer Learning?

- Transfer Learning is a Machine Learning approach that utilizes knowledge from a pretrained model, trained on a related task or domain, to improve performance on a new task with limited training data.

- The primary goal of Transfer Learning is to accelerate model development and enhance performance by leveraging existing knowledge.
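
To make the idea concrete, here is a minimal sketch of the simplest form of reuse: a feature extractor is kept frozen and only a small new "head" is trained on a handful of target examples. Everything in it is an assumption made for illustration: the fixed weights in `frozen_features` stand in for a model pretrained on a source task, and the four-point dataset is a toy.

```python
import math

def frozen_features(x):
    """Stand-in for a pretrained feature extractor; its weights are never updated."""
    # Hypothetical fixed weights, assumed to have been learned on a source task.
    w = [(1.0, -1.0), (0.5, 0.5)]
    return [math.tanh(a * x[0] + b * x[1]) for a, b in w]

def train_head(data, epochs=200, lr=0.5):
    """Fit a logistic-regression head on top of the frozen features with SGD."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            z = sum(w * fi for w, fi in zip(weights, f)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias

def predict(head, x):
    """Return the predicted class (0 or 1) for input x."""
    weights, bias = head
    f = frozen_features(x)
    return 1 if sum(w * fi for w, fi in zip(weights, f)) + bias > 0 else 0

# Small labeled target set (toy data): the label is 1 when x[0] > x[1].
target_data = [((2.0, 0.0), 1), ((1.0, -1.0), 1), ((0.0, 2.0), 0), ((-1.0, 1.0), 0)]
head = train_head(target_data)
accuracy = sum(predict(head, x) == y for x, y in target_data) / len(target_data)
```

Only the three head parameters are learned here, which is why so few target examples can be enough when the frozen features already suit the new task.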

Benefits of Transfer Learning:

- Reduced Training Time: Transfer Learning allows for faster model training, as the pretrained model has already learned useful features or representations from the source task.

- Improved Performance: Models using Transfer Learning often achieve better performance compared to models trained from scratch, particularly when training data is limited.

- Adaptability: Transfer Learning enables models to adapt to new tasks or domains with minimal retraining, making it a valuable technique in various real-world applications.

Types of Transfer Learning:

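- Inductive Transfer Learning: Transferring knowledge from a source task to a different target task, with at least some labeled data available for the target. The source and target domains may or may not be the same.

- Transductive Transfer Learning: Applying knowledge from a source domain to a different target domain while the task remains the same. Labeled data is typically available only in the source domain.

- Unsupervised Transfer Learning: Transferring knowledge when no labeled data is available for either the source or the target, usually for unsupervised target tasks such as clustering or dimensionality reduction.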
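
One common way to tell these three settings apart is to ask whether the task changes and where labeled data is available. The helper below is a simplified sketch of that decision rule, following a widely used survey taxonomy rather than anything specific to this post. The function name and its parameters are hypothetical, and real projects often fall between the categories.

```python
def transfer_setting(same_task, source_labeled, target_labeled):
    """Classify a transfer scenario into one of the three settings listed above.

    Simplified rule of thumb (an assumption for illustration):
    - inductive: the task changes and some target labels exist
    - transductive: the task stays the same, labels exist only in the source
    - unsupervised: the task changes and no labels exist on either side
    """
    if not same_task and target_labeled:
        return "inductive"
    if same_task and source_labeled and not target_labeled:
        return "transductive"
    if not same_task and not source_labeled and not target_labeled:
        return "unsupervised"
    return "outside this three-way taxonomy"

# A classifier trained on labeled product reviews, applied to unlabeled movie reviews.
example = transfer_setting(same_task=True, source_labeled=True, target_labeled=False)
```

In this example the task (sentiment classification) is unchanged and only the source has labels, so `example` is `"transductive"`.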

Author: Ali K. Ibrahim

Applications of Transfer Learning:

- Image Recognition: Fine-tuning pretrained Convolutional Neural Networks (CNNs) on new image classification tasks with limited labeled data.

- Natural Language Processing: Adapting pretrained language models, such as BERT or GPT, for downstream tasks like sentiment analysis or text classification.

- Reinforcement Learning: Utilizing knowledge from a pretrained agent to accelerate learning in a new environment or task.
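
The fine-tuning pattern behind these applications can be sketched at toy scale: train on a data-rich source task, then continue training the same parameters on a small target set. The example below uses a one-variable linear model instead of a CNN or a language model, and the two tasks, learning rates, and step counts are all assumptions chosen for illustration.

```python
def train_linear(data, w, b, steps, lr):
    """Run `steps` full-batch gradient-descent updates on y = w*x + b (mean squared error)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def mse(data, w, b):
    """Mean squared error of the line y = w*x + b on `data`."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Source task: plenty of data from y = 2x + 1.
source = [(x / 10, 2 * (x / 10) + 1) for x in range(-20, 21)]
# Target task: only four points from a related function, y = 2.2x + 0.8.
target = [(x, 2.2 * x + 0.8) for x in (-1.0, 0.0, 1.0, 2.0)]

# Pretrain on the source, then fine-tune briefly on the target.
pretrained = train_linear(source, 0.0, 0.0, steps=500, lr=0.1)
fine_tuned = train_linear(target, *pretrained, steps=5, lr=0.05)

# Baseline: the same five target steps, starting from zero instead.
from_scratch = train_linear(target, 0.0, 0.0, steps=5, lr=0.05)

loss_fine_tuned = mse(target, *fine_tuned)
loss_from_scratch = mse(target, *from_scratch)
```

With the same five update steps on the target, the fine-tuned model ends with a much lower loss than the one trained from scratch, because it starts close to a good solution. Fine-tuning a pretrained CNN or a model such as BERT follows the same idea with far more parameters.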

Challenges and Future Directions:

- Negative Transfer: Knowledge from a poorly matched source task can hurt performance on the target task. The challenge is to detect when this happens and to avoid it.

- Domain Adaptation: Identifying and overcoming differences between the source and target domains to ensure effective knowledge transfer.

- Cross-Architecture and Cross-Paradigm Transfer: Developing techniques to transfer knowledge across different model architectures or learning paradigms, such as from supervised to unsupervised learning.
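
A simple practical guard against negative transfer is to train a from-scratch baseline alongside the transferred model and compare their scores on the target task. The sketch below assumes higher scores are better. The function names are hypothetical and the accuracy values are made-up numbers used only to show the check.

```python
def transfer_gain(baseline_scores, transfer_scores):
    """Mean target-task score with transfer minus mean score without it."""
    baseline = sum(baseline_scores) / len(baseline_scores)
    transferred = sum(transfer_scores) / len(transfer_scores)
    return transferred - baseline

def is_negative_transfer(baseline_scores, transfer_scores, tolerance=0.0):
    """Flag negative transfer when the transferred model is worse by more than `tolerance`."""
    return transfer_gain(baseline_scores, transfer_scores) < -tolerance

# Hypothetical validation accuracies from three runs each (illustrative numbers only).
scratch_runs = [0.81, 0.79, 0.80]
transfer_runs = [0.74, 0.76, 0.75]
flagged = is_negative_transfer(scratch_runs, transfer_runs)
```

Averaging over several runs and setting a small `tolerance` helps avoid flagging differences that are just run-to-run noise.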
