Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to address the limitations of traditional RNNs, particularly their difficulty in learning long-term dependencies because gradients tend to vanish over long sequences. In this blog post, we will discuss the architecture of LSTMs, their key components, and their applications.

What are Long Short-Term Memory Networks?

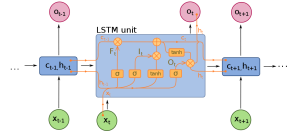

- LSTMs are a variant of RNNs that use specialized memory cells to store and manipulate information, enabling them to learn long-term dependencies more effectively than traditional RNNs.

- They use a gating mechanism that controls the flow of information through the memory cells, so the network can learn which information to retain and which to discard.

Key Components of LSTMs:

- Memory Cells: Specialized units that store and manipulate information, allowing the network to capture long-term dependencies.

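- Input, Forget, and Output Gates: Mechanisms that control the flow of information into, within, and out of the memory cells. The input gate decides how much new information is written to the cell, the forget gate decides how much of the existing cell state is kept, and the output gate decides how much of the cell state is exposed as the hidden state, so the LSTM can selectively remember and forget information as needed.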
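
To make the interplay between the memory cell and the three gates more concrete, here is a minimal sketch of a single LSTM time step in plain Python. It follows the commonly used LSTM formulation and is not tied to any particular library. The names `lstm_step`, `affine`, `init_params`, and the `params` layout are assumptions made for this illustration, and the weights are random rather than trained. In practice you would use a framework's LSTM layer instead of hand-written loops.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, x, U, h, b):
    """Compute W @ x + U @ h + b using plain lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step.

    params maps each of "i", "f", "o", "g" to a (W, U, b) triple
    (hypothetical layout chosen for this sketch).
    """
    def pre(name):
        W, U, b = params[name]
        return affine(W, x_t, U, h_prev, b)

    i = [sigmoid(z) for z in pre("i")]    # input gate: how much to write
    f = [sigmoid(z) for z in pre("f")]    # forget gate: how much to keep
    o = [sigmoid(z) for z in pre("o")]    # output gate: how much to expose
    g = [math.tanh(z) for z in pre("g")]  # candidate values to write

    # Memory cell update: keep part of the old state, add part of the candidate.
    c_t = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Hidden state: a gated view of the squashed cell state.
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (untrained, for illustration only)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        name: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for name in "ifog"
    }


params = init_params(input_size=3, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
sequence = [[0.5, -1.0, 0.25], [1.0, 0.0, -0.5], [0.0, 0.3, 0.9]]  # made-up inputs
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, params)
print(len(h), len(c))
```

Note that the cell state is updated additively (`f * c_prev + i * g`). When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is what lets information survive across many time steps.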

Applications of Long Short-Term Memory Networks:

- Natural Language Processing: Tasks such as sentiment analysis, text summarization, and machine translation benefit from LSTMs’ ability to capture long-range dependencies within text data.

- Time Series Forecasting: LSTMs can model complex temporal patterns and long-term dependencies in time series data, making them suitable for tasks like stock market prediction or energy consumption forecasting.

- Sequence-to-Sequence Learning: LSTMs can be used for tasks that involve mapping input sequences to output sequences, such as speech recognition or video captioning.
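
For the time series case, a common preparation step is to slice the series into fixed-length input windows, each paired with the value to predict. The sketch below shows one way this might look. The helper `make_windows`, its `window` and `horizon` parameters, and the sample readings are all made up for illustration and are not taken from any specific library or dataset.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the end of that window.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        x = series[start:start + window]
        y = series[start + window + horizon - 1]
        pairs.append((x, y))
    return pairs


energy_kwh = [10, 12, 13, 15, 14, 16, 18]  # made-up readings
pairs = make_windows(energy_kwh, window=3)
for x, y in pairs:
    print(x, "->", y)
```

Each window would then be fed to the LSTM one value per time step, with the final hidden state used to predict the target.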
